A colleague of mine was assisting a customer with their AWS Connect implementation. The customer's AWS Connect solution uses AWS Lambda functions to give Contact Centre staff additional customer details, looked up from the telephone number of the incoming call.

My colleague was updating and testing Python code locally on their laptop, then manually creating and deploying AWS Serverless Application Model (AWS SAM) packages to update the Lambda function. I wanted to improve the deployment process by removing some of the manual steps and keeping the Lambda code under version control. This blog post demonstrates how we improved the deployment process by using Terraform to build a Continuous Integration / Continuous Deployment (CI/CD) pipeline.

The two main AWS components of the solution are described below for some background knowledge.

AWS Connect is a cloud based Contact Centre service provided by AWS. It enables businesses to set up and manage a scalable customer Contact Centre in AWS cloud without the need for significant upfront investment in hardware or infrastructure.

AWS Lambda is another service provided by AWS (note, there are over 240 AWS services!). It allows you to run your code without provisioning or managing servers (i.e. it is a serverless computing solution). With Lambda, you can focus on writing and deploying your application code, while AWS handles the underlying infrastructure and scaling. AWS Lambda is a pay-per-use service that integrates with many other AWS services, such as AWS Connect. AWS Lambda also offers a free tier if you want to have a play with the technology. Lambda code can be written in a variety of programming languages; I'm using Python in this demonstration.
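To make the use case concrete, here is a minimal sketch of what a customer-lookup Lambda function might look like. It is not the customer's actual code: the `CUSTOMERS` dictionary and the returned field names are hypothetical, and a real function would query a CRM or database. It assumes the event shape that Connect sends to Lambda, where the caller's number sits under `Details.ContactData.CustomerEndpoint.Address`, and it returns a flat set of key/value pairs, which is the safest response shape for a contact flow to consume.

```python
# Hypothetical in-memory customer directory; a real function would query a CRM or database.
CUSTOMERS = {
    "+64215550100": {"customerName": "Alex Example", "accountTier": "gold"},
}


def lambda_handler(event, context):
    """Return customer details for the caller's phone number.

    The caller's number is read from
    Details.ContactData.CustomerEndpoint.Address in the incoming event.
    """
    contact_data = event.get("Details", {}).get("ContactData", {})
    endpoint = contact_data.get("CustomerEndpoint") or {}
    phone_number = endpoint.get("Address", "")

    customer = CUSTOMERS.get(phone_number)
    if customer is None:
        # Unknown caller: still return a flat response the contact flow can branch on.
        return {"customerFound": "false"}
    return {"customerFound": "true", **customer}
```

The contact flow can then branch on `customerFound` and show the remaining attributes to the agent.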

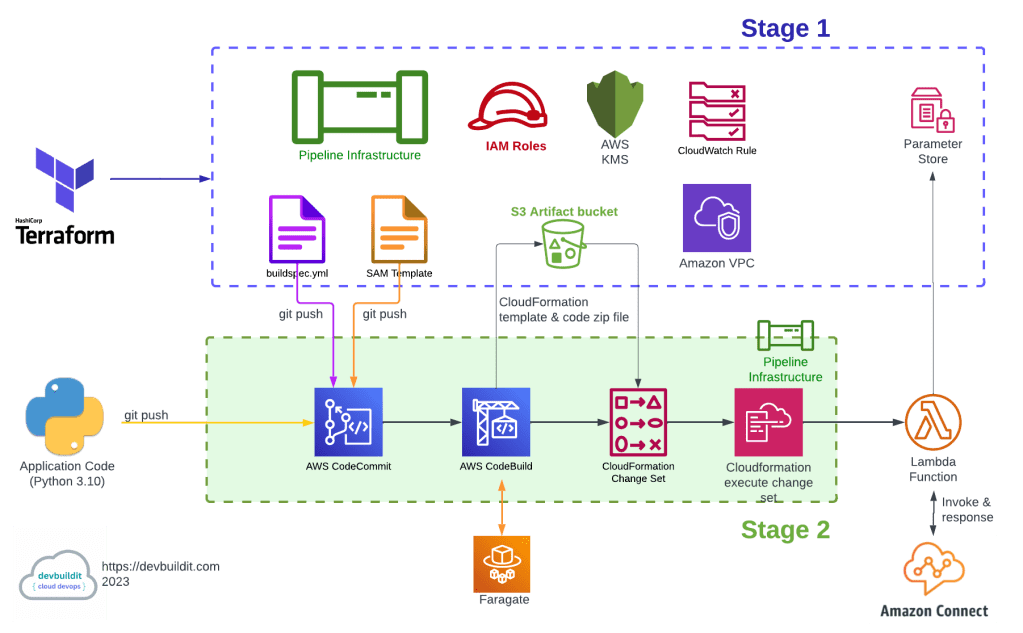

The solution is broken down into two parts, namely:

- Stage 1: One-off deployment of a CI/CD pipeline and supporting infrastructure using Terraform

- Stage 2: Build and deploy Lambda code via the CI/CD Pipeline

The Terraform code for this demonstration can be found here in a GitHub repository (https://github.com/arinzl/aws-lambda-cicdpipeline).

AWS provides a range of services that can be combined to create a robust CI/CD pipeline. The main components used in this demonstration CI/CD pipeline include:

AWS CodeCommit: A fully managed source control service that hosts private Git repositories. It allows teams to collaborate on code and version control.

AWS CodeBuild: A fully managed build service that compiles source code, runs tests, and produces deployable artifacts. It integrates with various build tools and languages to build applications.

AWS CodeDeploy (not used in this demonstration): A deployment service that automates application deployments to a variety of compute services, including EC2 instances, AWS Fargate, AWS Lambda, and more. It allows for automated rolling updates and provides blue/green deployment capabilities.

AWS CloudFormation: A service that allows you to define and provision infrastructure as code (IaC). It helps in creating a repeatable and automated process for deploying and managing AWS resources.

AWS CodePipeline: A fully managed continuous delivery service that orchestrates and automates the release process. It helps in building, testing, and deploying code changes across multiple stages and environments. AWS CodePipeline is the glue that combines and orchestrates AWS CodeCommit, AWS CodeBuild, AWS CodeDeploy, AWS CloudFormation and artifact storage.

AWS CloudWatch: A monitoring and observability service that provides metrics, logs, and alarms for monitoring AWS resources and applications. It can be used to trigger events in the CI/CD pipeline based on specified conditions.

In Stage 1, the Terraform code deploys the following components:

- CI/CD Pipeline and supporting components (e.g. IAM roles, KMS key, CloudWatch Event rule and SSM parameters)

- buildspec.yml file for building an environment and packaging the application code

- SAM Template file to build resources required for the deployment

In stage 2, the two files created in stage 1 (buildspec.yml and SAM template) are combined with the application code and fed into the pipeline via the CodeCommit repository which is the first component in the CI/CD pipeline. The CI/CD pipeline will run through the various stages and eventually deploy a Lambda function via AWS CloudFormation.

Looking more deeply at the CI/CD pipeline, the flow through the pipeline follows the process below:

1. Code is pushed/uploaded to the AWS CodeCommit repository.

2. A CloudWatch Event Rule detects the commit into the AWS CodeCommit repository and starts the AWS CodePipeline.

3. CodePipeline creates a zip file of the CodeCommit contents and copies it to the S3 artifact bucket in location <S3 bucket name>/source_out/.

4. CodePipeline invokes a CodeBuild project.

5. The CodeBuild project spawns a Fargate container node.

6. The Fargate container node extracts the zip file from <S3 bucket name>/source_out/ into the container.

7. The buildspec.yml file is executed within the Fargate container. In our case, a CloudFormation template is created by running the “aws cloudformation package” command in the Fargate container.

8. The build artifact (outputSamTemplate.yml) is zipped into a file in S3 location <S3 bucket name>/build_outp/.

9. The Lambda code is zipped into the root of the S3 bucket.

10. Steps 3-9 are logged into the S3 bucket at <S3 bucket name>/build-log/ and into CloudWatch.

11. A CloudFormation Change Set is created using outputSamTemplate.yml and the code zip file located in the root of the artifact S3 bucket.

12. The CloudFormation Change Set is executed and the Lambda function is deployed.

The files used in this demonstration can be found in the GitHub repository. A summary of them is shown below. Note, the Terraform code is located in the subfolder ‘terraform’ and the application Lambda code is located in the subfolder ‘appfolder’.

build_buildspecfile.tf – builds buildspec.yml file used by CodeBuild

```hcl
data "template_file" "buildspec" {
  template = file("../buildspec.yml.tpl")

  vars = {
    s3bucket = module.artifact_bucket.s3_bucket_id
  }
}

resource "local_file" "buildspec" {
  filename = "${path.module}/../buildspec.yml"
  content  = data.template_file.buildspec.rendered
}
```
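The rendering step above is plain placeholder substitution: Terraform reads the `.tpl` file, swaps `${s3bucket}` for the artifact bucket name, and writes the result to disk. The Python sketch below mimics that with `string.Template`, which happens to share the simple `${name}` syntax (Terraform templates support much more than this). The template text is a hypothetical stand-in, since the real `buildspec.yml.tpl` lives in the repository and is not reproduced in this post, and the bucket name uses a made-up account ID.

```python
from string import Template

# Hypothetical stand-in for buildspec.yml.tpl; the real template lives in the repository.
BUILDSPEC_TEMPLATE = """\
version: 0.2
phases:
  build:
    commands:
      - aws cloudformation package --template-file samTemplate.yml --s3-bucket ${s3bucket} --output-template-file outputSamTemplate.yml
artifacts:
  files:
    - outputSamTemplate.yml
"""


def render(template_text, variables):
    """Substitute ${name} placeholders, raising KeyError if a variable is missing."""
    return Template(template_text).substitute(variables)


# The bucket name follows the "<app_name>-artifact-<account id>" pattern with a made-up account ID.
rendered = render(BUILDSPEC_TEMPLATE, {"s3bucket": "apicaller-artifact-123456789012"})
print(rendered)
```

Using `substitute` rather than `safe_substitute` means a forgotten variable fails loudly instead of leaving a stray `${...}` in the generated file.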

build_sam_template.tf – builds samTemplate.yml used by CodeBuild

```hcl
data "template_file" "sam_template" {
  template = file("../samTemplate.yml.tpl")

  vars = {
    my_lambdarole              = aws_iam_role.lambda.arn
    my_app_name                = var.app_name
    my_layer_arn               = var.request_layer_arn
    my_ssm_parameter_store_key = var.ssm_parameter_store_key
  }
}

resource "local_file" "sam_template" {
  filename = "${path.module}/../samTemplate.yml"
  content  = data.template_file.sam_template.rendered
}
```

cloudwatch.tf – CloudWatch Event rule to detect changes to the CodeCommit repository

```hcl
resource "aws_cloudwatch_event_rule" "commit" {
  name        = "${var.app_name}-codecommit_push"
  description = "Capture each push to the CodeCommit repository"

  event_pattern = <<-EOF
    {
      "source": ["aws.codecommit"],
      "detail-type": ["CodeCommit Repository State Change"],
      "resources": ["${aws_codecommit_repository.lambda_repo.arn}"],
      "detail": {
        "referenceType": ["branch"],
        "referenceName": ["${var.repo_branch}"]
      }
    }
  EOF
}

resource "aws_cloudwatch_event_target" "pipeline" {
  target_id = "${var.app_name}-pipeline-trigger"
  rule      = aws_cloudwatch_event_rule.commit.name
  arn       = aws_codepipeline.app_pipeline.arn
  role_arn  = aws_iam_role.cloudwatchtrigger.arn
}
```
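To see why this rule fires only for the chosen branch, it can help to model the matching logic. The Python sketch below is a simplified illustration of exact-value event pattern matching, not the service's actual implementation: each pattern field lists the allowed values, nested objects are matched recursively, and fields missing from the pattern are ignored. The repository ARN, account ID and sample event values are hypothetical.

```python
def matches(pattern, event):
    """Return True when every field named in the pattern matches the event.

    Simplified rules: a list in the pattern means "any of these values",
    a dict means "recurse into the nested object", and an event field that
    is itself a list matches when any of its items is allowed.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            actual_values = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in actual_values):
                return False
    return True


# Hypothetical repository ARN (made-up account ID).
REPO_ARN = "arn:aws:codecommit:ap-southeast-2:123456789012:apicaller-repo"

PATTERN = {
    "source": ["aws.codecommit"],
    "detail-type": ["CodeCommit Repository State Change"],
    "resources": [REPO_ARN],
    "detail": {"referenceType": ["branch"], "referenceName": ["main"]},
}

push_to_main = {
    "source": "aws.codecommit",
    "detail-type": "CodeCommit Repository State Change",
    "resources": [REPO_ARN],
    "detail": {
        "event": "referenceUpdated",
        "referenceType": "branch",
        "referenceName": "main",
    },
}

print(matches(PATTERN, push_to_main))
```

A push to any other branch changes `referenceName`, so the same function returns `False` and the pipeline is not started.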

data.tf – used to retrieve AWS Account ID

```hcl
data "aws_caller_identity" "current" {}

data "aws_ami" "amazon-linux-2" {
  most_recent = true
  owners      = ["amazon"]
  name_regex  = "amzn2-ami-hvm*"
}
```

iam.tf – IAM roles and permissions

```hcl
#------------------------------------------------------------------------------
# Role for Codebuild to create artifacts
#------------------------------------------------------------------------------
resource "aws_iam_role" "codebuild" {
  name = "${var.app_name}-codebuild-role"

  assume_role_policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "sts:AssumeRole",
          "Principal": {
            "Service": "codebuild.amazonaws.com"
          }
        }
      ]
    }
  EOF
}

resource "aws_iam_role_policy" "codebuild" {
  name = "${var.app_name}-codebuild-policy"
  role = aws_iam_role.codebuild.name

  policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "ec2:CreateNetworkInterface",
            "ec2:DescribeDhcpOptions",
            "ec2:DescribeNetworkInterfaces",
            "ec2:DeleteNetworkInterface",
            "ec2:DescribeSubnets",
            "ec2:DescribeSecurityGroups",
            "ec2:DescribeVpcs"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "ec2:CreateNetworkInterfacePermission"
          ],
          "Resource": "*",
          "Condition": {
            "StringEquals": {
              "ec2:Subnet": [
                "${module.codebuild_vpc.private_subnet_arns[0]}"
              ],
              "ec2:AuthorizedService": "codebuild.amazonaws.com"
            }
          }
        },
        {
          "Effect": "Allow",
          "Action": [
            "s3:*"
          ],
          "Resource": [
            "${module.artifact_bucket.s3_bucket_arn}",
            "${module.artifact_bucket.s3_bucket_arn}/*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "kms:*"
          ],
          "Resource": "${aws_kms_key.artifact_kms_key.arn}"
        }
      ]
    }
  EOF
}
```

```hcl
#------------------------------------------------------------------------------
# Role for CodePipeline
#------------------------------------------------------------------------------
resource "aws_iam_role" "codepipeline" {
  name = "${var.app_name}-pipeline-role"

  assume_role_policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "codepipeline.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }
  EOF
}

resource "aws_iam_role_policy" "codepipeline_role_policy" {
  name = "${var.app_name}-pipeline-policy"
  role = aws_iam_role.codepipeline.id

  policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "ReadRepoAndContents",
          "Effect": "Allow",
          "Action": [
            "codecommit:GetBranch",
            "codecommit:GetCommit",
            "codecommit:GetUploadArchiveStatus",
            "codecommit:UploadArchive"
          ],
          "Resource": "${aws_codecommit_repository.lambda_repo.arn}"
        },
        {
          "Sid" : "CodeBuildActivities",
          "Effect": "Allow",
          "Action": [
            "codebuild:BatchGetBuilds",
            "codebuild:StartBuild"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "ec2:CreateNetworkInterface",
            "ec2:DescribeDhcpOptions",
            "ec2:DescribeNetworkInterfaces",
            "ec2:DeleteNetworkInterface",
            "ec2:DescribeSubnets",
            "ec2:DescribeSecurityGroups",
            "ec2:DescribeVpcs"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "ec2:CreateNetworkInterfacePermission"
          ],
          "Resource": "*",
          "Condition": {
            "StringEquals": {
              "ec2:Subnet": [
                "${module.codebuild_vpc.private_subnet_arns[0]}"
              ],
              "ec2:AuthorizedService": "codebuild.amazonaws.com"
            }
          }
        },
        {
          "Effect": "Allow",
          "Action": [
            "s3:*"
          ],
          "Resource": [
            "${module.artifact_bucket.s3_bucket_arn}",
            "${module.artifact_bucket.s3_bucket_arn}/*"
          ]
        },
        {
          "Effect": "Allow",
          "Action": [
            "kms:*"
          ],
          "Resource": "${aws_kms_key.artifact_kms_key.arn}"
        },
        {
          "Effect": "Allow",
          "Action": [
            "cloudformation:CreateChangeSet",
            "cloudformation:DeleteChangeSet",
            "cloudformation:DescribeChangeSet",
            "cloudformation:DescribeStacks",
            "cloudformation:ExecuteChangeSet"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "iam:PassRole"
          ],
          "Resource": "${aws_iam_role.cloudformation.arn}"
        }
      ]
    }
  EOF
}
```

```hcl
#------------------------------------------------------------------------------
# Role for lambda Execution Role
#------------------------------------------------------------------------------
resource "aws_iam_role" "lambda" {
  name = "${var.app_name}-lambda-role"

  assume_role_policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Principal": {
            "Service": "lambda.amazonaws.com"
          },
          "Action": "sts:AssumeRole",
          "Effect": "Allow"
        }
      ]
    }
  EOF
}

resource "aws_iam_role_policy" "lambda_role_policy" {
  name = "${var.app_name}-lambda-policy"
  role = aws_iam_role.lambda.id

  policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "LambdaCloudwatchLogging",
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogGroup",
            "logs:CreateLogStream",
            "logs:PutLogEvents"
          ],
          "Resource": "arn:aws:logs:*:${data.aws_caller_identity.current.account_id}:log-group:/aws/lambda/${var.app_name}:*"
        },
        {
          "Sid": "LambdaSSMParameter",
          "Effect": "Allow",
          "Action": [
            "ssm:GetParameter",
            "ssm:GetParameters",
            "ssm:GetParametersByPath"
          ],
          "Resource": "arn:aws:ssm:*:${data.aws_caller_identity.current.account_id}:parameter/${var.ssm_parameter_store_key}"
        }
      ]
    }
  EOF
}
```

```hcl
#------------------------------------------------------------------------------
# Role for cloudwatch to trigger pipeline after repo commit
#------------------------------------------------------------------------------
resource "aws_iam_role" "cloudwatchtrigger" {
  name = "${var.app_name}-cloudwatch-pipeline-trigger-role"

  assume_role_policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "events.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }
  EOF
}

resource "aws_iam_role_policy" "trigger" {
  name = "${var.app_name}-pipeline-trigger-policy"
  role = aws_iam_role.cloudwatchtrigger.id

  policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect":"Allow",
          "Action": [
            "codepipeline:StartPipelineExecution"
          ],
          "Resource": [
            "${aws_codepipeline.app_pipeline.arn}"
          ]
        }
      ]
    }
  EOF
}
```

```hcl
resource "aws_iam_role" "cloudformation" {
  name = "${var.app_name}-cloudformation-role"

  assume_role_policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Principal": {
            "Service": "cloudformation.amazonaws.com"
          },
          "Action": "sts:AssumeRole",
          "Effect": "Allow"
        }
      ]
    }
  EOF
}

resource "aws_iam_role_policy" "cloudformation" {
  name = "${var.app_name}-cloudformation-policy"
  role = aws_iam_role.cloudformation.id

  policy = <<-EOF
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Resource": "arn:aws:logs:*:*:*",
          "Action": "logs:*",
          "Effect": "Allow"
        },
        {
          "Resource": [
            "${module.artifact_bucket.s3_bucket_arn}",
            "${module.artifact_bucket.s3_bucket_arn}/*"
          ],
          "Action": [
            "s3:GetObject",
            "s3:GetObjectVersion",
            "s3:GetBucketVersioning",
            "s3:PutObject"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "arn:aws:lambda:*",
          "Action": [
            "lambda:*"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "arn:aws:apigateway:ap-southeast-2::*",
          "Action": [
            "apigateway:*"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "arn:aws:iam:::*",
          "Action": [
            "iam:AttachRolePolicy",
            "iam:DeleteRolePolicy",
            "iam:DetachRolePolicy",
            "iam:GetRole",
            "iam:CreateRole",
            "iam:DeleteRole",
            "iam:PutRolePolicy"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "*",
          "Action": [
            "iam:PassRole"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "arn:aws:cloudformation:ap-southeast-2:aws:transform/Serverless-2016-10-31",
          "Action": [
            "cloudformation:CreateChangeSet"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "arn:aws:codedeploy:ap-southeast-2::application:*",
          "Action": [
            "codedeploy:CreateApplication",
            "codedeploy:DeleteApplication",
            "codedeploy:RegisterApplicationRevision"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "arn:aws:codedeploy:ap-southeast-2::deploymentgroup:*",
          "Action": [
            "codedeploy:CreateDeploymentGroup",
            "codedeploy:CreateDeployment",
            "codedeploy:GetDeployment"
          ],
          "Effect": "Allow"
        },
        {
          "Resource": "arn:aws:codedeploy:ap-southeast-2::deploymentconfig:*",
          "Action": [
            "codedeploy:GetDeploymentConfig"
          ],
          "Effect": "Allow"
        }
      ]
    }
  EOF
}
```

kms.tf – KMS key for data encryption at rest

```hcl
resource "aws_kms_key" "artifact_kms_key" {
  description         = "KMS Keys Artifact bucket for Encryption"
  is_enabled          = true
  enable_key_rotation = true

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
      },
      "Action": "kms:*",
      "Resource": "*"
    },
    {
      "Sid": "Allow access for Key Administrators",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
      },
      "Action": [
        "kms:Create*",
        "kms:Describe*",
        "kms:Enable*",
        "kms:List*",
        "kms:Put*",
        "kms:Update*",
        "kms:Revoke*",
        "kms:Disable*",
        "kms:Get*",
        "kms:Delete*",
        "kms:TagResource",
        "kms:UntagResource",
        "kms:ScheduleKeyDeletion",
        "kms:CancelKeyDeletion"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow use of the key",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
        ]
      },
      "Action": [
        "kms:Encrypt",
        "kms:Decrypt",
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:DescribeKey"
      ],
      "Resource": "*"
    },
    {
      "Sid": "Allow attachment of persistent resources",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
        ]
      },
      "Action": [
        "kms:CreateGrant",
        "kms:ListGrants",
        "kms:RevokeGrant"
      ],
      "Resource": "*",
      "Condition": {
        "Bool": {
          "kms:GrantIsForAWSResource": "true"
        }
      }
    }
  ]
}
EOF
}

resource "aws_kms_alias" "app_kms_alias" {
  target_key_id = aws_kms_key.artifact_kms_key.key_id
  name          = "alias/${var.app_name}"
}
```

pipeline.tf – CodePipeline Infrastructure

```hcl
resource "aws_codecommit_repository" "lambda_repo" {
  repository_name = "${var.app_name}-repo"
  description     = "Lambda Repository for ${var.app_name}"
  default_branch  = var.repo_branch
}

resource "aws_codebuild_project" "codebuild" {
  depends_on = [module.artifact_bucket]

  name           = "${var.app_name}-codebuildproject"
  build_timeout  = "5"
  service_role   = aws_iam_role.codebuild.arn
  encryption_key = aws_kms_key.artifact_kms_key.arn

  artifacts {
    type = "CODEPIPELINE"
  }

  environment {
    compute_type                = "BUILD_GENERAL1_SMALL"
    image                       = "aws/codebuild/standard:1.0"
    type                        = "LINUX_CONTAINER"
    image_pull_credentials_type = "CODEBUILD"
  }

  logs_config {
    cloudwatch_logs {
      group_name  = "/aws/${var.app_name}/build"
      stream_name = "${var.app_name}build-log-stream"
    }

    s3_logs {
      status   = "ENABLED"
      location = "${module.artifact_bucket.s3_bucket_id}/build-log"
    }
  }

  source {
    type = "CODEPIPELINE"
  }

  source_version = var.repo_branch

  vpc_config {
    vpc_id  = module.codebuild_vpc.vpc_id
    subnets = module.codebuild_vpc.private_subnets

    security_group_ids = [
      aws_security_group.codebuild.id
    ]
  }
}

resource "aws_codepipeline" "app_pipeline" {
  name     = "${var.app_name}-pipeline"
  role_arn = aws_iam_role.codepipeline.arn

  artifact_store {
    location = module.artifact_bucket.s3_bucket_id
    type     = "S3"
  }

  stage {
    name = "Source"

    action {
      name             = "Source"
      category         = "Source"
      owner            = "AWS"
      provider         = "CodeCommit"
      input_artifacts  = []
      version          = "1"
      output_artifacts = ["source_output"]

      configuration = {
        RepositoryName       = "${var.app_name}-repo" #local.permission_sets_repository_name
        BranchName           = var.repo_branch
        PollForSourceChanges = false
      }
    }
  }

  stage {
    name = "Build"

    action {
      name             = "Build"
      category         = "Build"
      owner            = "AWS"
      provider         = "CodeBuild"
      input_artifacts  = ["source_output"]
      output_artifacts = ["build_output"]
      version          = "1"

      configuration = {
        ProjectName = aws_codebuild_project.codebuild.id
      }
    }
  }

  stage {
    name = "CreateChangeSet_TEST"

    action {
      name            = "GenerateChangeSet"
      category        = "Deploy"
      owner           = "AWS"
      provider        = "CloudFormation"
      input_artifacts = ["build_output"]
      version         = "1"

      configuration = {
        ActionMode    = "CHANGE_SET_REPLACE"
        Capabilities  = "CAPABILITY_IAM"
        ChangeSetName = var.app_name
        RoleArn       = aws_iam_role.cloudformation.arn
        StackName     = "${var.app_name}-DEV"
        TemplatePath  = "build_output::outputSamTemplate.yml"
      }
    }
  }

  stage {
    name = "DeployChangeSet_TEST"

    action {
      name            = "ExecuteChangeSet"
      category        = "Deploy"
      owner           = "AWS"
      provider        = "CloudFormation"
      input_artifacts = ["build_output"]
      version         = "1"

      configuration = {
        ActionMode    = "CHANGE_SET_EXECUTE"
        Capabilities  = "CAPABILITY_IAM"
        ChangeSetName = var.app_name
        RoleArn       = aws_iam_role.cloudformation.arn
        StackName     = "${var.app_name}-DEV"
        TemplatePath  = "build_output::outputSamTemplate.yml"
      }
    }
  }
}
```
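Once the pipeline exists, it is handy to check where a run has got to without opening the console. The sketch below is one possible way to do that, assuming `boto3` is installed and AWS credentials are configured. `fetch_pipeline_state` wraps the CodePipeline `GetPipelineState` API, and `summarise_stages` reduces its response to one status per stage. The `"NotStarted"` label is my own placeholder for stages that have never run; it is not a value returned by AWS.

```python
def summarise_stages(pipeline_state):
    """Map each stage name to the status of its latest execution."""
    summary = {}
    for stage in pipeline_state.get("stageStates", []):
        latest = stage.get("latestExecution", {})
        # "NotStarted" is a local placeholder, not an AWS status value.
        summary[stage["stageName"]] = latest.get("status", "NotStarted")
    return summary


def fetch_pipeline_state(pipeline_name, region_name):
    """Call CodePipeline's GetPipelineState API (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so summarise_stages works without boto3 installed

    client = boto3.client("codepipeline", region_name=region_name)
    return client.get_pipeline_state(name=pipeline_name)


# Trimmed, hand-written example of the response shape, using the stage names defined above.
sample_state = {
    "pipelineName": "apicaller-pipeline",
    "stageStates": [
        {"stageName": "Source", "latestExecution": {"status": "Succeeded"}},
        {"stageName": "Build", "latestExecution": {"status": "InProgress"}},
        {"stageName": "CreateChangeSet_TEST"},
        {"stageName": "DeployChangeSet_TEST"},
    ],
}

for stage_name, status in summarise_stages(sample_state).items():
    print(f"{stage_name}: {status}")
```

With the variable values used in this post, a live check would be `summarise_stages(fetch_pipeline_state("apicaller-pipeline", "ap-southeast-2"))`.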

provider.tf – Terraform definition file

```hcl
provider "aws" {
  region = var.region
}
```

s3.tf – Artifact S3 bucket

```hcl
locals {
  account_id = data.aws_caller_identity.current.account_id
}

module "artifact_bucket" {
  source  = "terraform-aws-modules/s3-bucket/aws"
  version = "3.11.0"

  bucket = "${var.app_name}-artifact-${local.account_id}"

  versioning = {
    enabled = false
  }
}

resource "aws_s3_bucket_policy" "artifact_bucket_policy" {
  bucket = module.artifact_bucket.s3_bucket_id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid    = "pipeline-artifact-access"
        Effect = "Allow"
        Principal = {
          "AWS" = "arn:aws:iam::${local.account_id}:root"
        }
        Action = [
          "s3:*",
        ]
        Resource = [
          "arn:aws:s3:::${module.artifact_bucket.s3_bucket_id}",
          "arn:aws:s3:::${module.artifact_bucket.s3_bucket_id}/*"
        ]
      },
    ]
  })
}

resource "aws_s3_bucket_lifecycle_configuration" "example" {
  bucket = module.artifact_bucket.s3_bucket_id

  rule {
    id = "rule-1"

    abort_incomplete_multipart_upload {
      days_after_initiation = 6
    }

    status = "Enabled"
  }
}

resource "aws_s3_bucket_server_side_encryption_configuration" "example" {
  bucket = module.artifact_bucket.s3_bucket_id

  rule {
    apply_server_side_encryption_by_default {
      kms_master_key_id = aws_kms_key.artifact_kms_key.arn
      sse_algorithm     = "aws:kms"
    }
  }
}
```

security_groups.tf – security groups for Fargate usage

```hcl
resource "aws_security_group" "codebuild" {
  name_prefix = var.app_name
  description = "Allow outbound traffic to allow download images and dependencies for codebuild"
  vpc_id      = module.codebuild_vpc.vpc_id

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

ssm_parameters.tf – parameter store value to be read by Lambda Function

```hcl
resource "aws_ssm_parameter" "lambda_app_target_url" {
  description = "test location for web request"
  name        = "/${var.ssm_parameter_store_key}"
  type        = "String"
  value       = "https://replaceme.com/api"

  lifecycle {
    ignore_changes = [
      value
    ]
  }
}
```
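On the application side, the Lambda function reads this parameter at runtime using the `ssm:GetParameter` permission granted in iam.tf. The sketch below shows one way that read might look; it is not the code from the repository's appfolder. The helper takes the SSM client as an argument so it can be exercised without AWS access, and the `SSM_PARAMETER_KEY` environment variable is a hypothetical name for however the key is actually passed to the function.

```python
import os


def load_target_url(ssm_client, parameter_key):
    """Fetch the parameter value; the key is stored without its leading slash."""
    response = ssm_client.get_parameter(Name=f"/{parameter_key}")
    return response["Parameter"]["Value"]


def lambda_handler(event, context):
    import boto3  # provided by the AWS Lambda Python runtime

    # SSM_PARAMETER_KEY is a hypothetical environment variable name.
    key = os.environ.get("SSM_PARAMETER_KEY", "apicaller/url")
    return {"targetUrl": load_target_url(boto3.client("ssm"), key)}
```

Because the Terraform resource ignores changes to `value`, the placeholder URL can be edited in Parameter Store afterwards and the function picks up the new value on its next read, without Terraform reverting it.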

terraform.tfvars – Terraform variable values

```hcl
region                  = "ap-southeast-2"
app_name                = "apicaller"
vpc_cidr_range          = "172.17.0.0/20"
private_subnets_list    = ["172.17.1.0/24"]
public_subnets_list     = ["172.17.15.0/24"]
#app_folder             = "appfolder"
repo_branch             = "main"
ssm_parameter_store_key = "apicaller/url"
request_layer_arn       = "arn:aws:lambda:ap-southeast-2:484673417484:layer:request3_10_mac:1"
```
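If you change the network values above, the subnets must still sit inside the VPC CIDR range or the VPC module will fail to create them. A quick sanity check with Python's standard `ipaddress` module is sketched below; the helper name is my own.

```python
import ipaddress


def subnets_outside_vpc(vpc_cidr, subnet_cidrs):
    """Return the subnets that do not fall inside the VPC CIDR range."""
    vpc = ipaddress.ip_network(vpc_cidr)
    return [
        cidr
        for cidr in subnet_cidrs
        if not ipaddress.ip_network(cidr).subnet_of(vpc)
    ]


# Values from terraform.tfvars: both subnets fit, so the list is empty.
print(subnets_outside_vpc("172.17.0.0/20", ["172.17.1.0/24", "172.17.15.0/24"]))
```

A /20 starting at 172.17.0.0 ends at 172.17.15.255, so a subnet such as 172.17.16.0/24 would be reported as outside the range.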

variable.tf – Terraform variable definition file

```hcl
variable "region" {
  description = "AWS Region"
  type        = string
}

variable "app_name" {
  description = "Application Name"
  type        = string
}

variable "vpc_cidr_range" {
  type = string
}

variable "private_subnets_list" {
  description = "Private subnet list for infrastructure"
  type        = list(string)
}

variable "public_subnets_list" {
  description = "Public subnet list for infrastructure"
  type        = list(string)
}

variable "repo_branch" {
  description = "Repo Branch Name"
  type        = string
}

variable "request_layer_arn" {
  description = "lambda python 3.10 request layer name"
  type        = string
}

variable "ssm_parameter_store_key" {
  description = "location of ssm parameter (without / prefix)"
  type        = string
}
```

vpc.tf – VPC for Fargate usage

```hcl
#------------------------------------------------------------------------------
# VPC Module
#------------------------------------------------------------------------------
module "codebuild_vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "4.0.1"

  name = "codebuild-${var.app_name}-vpc"
  cidr = var.vpc_cidr_range
  azs  = ["${var.region}a"]

  private_subnets = var.private_subnets_list
  public_subnets  = var.public_subnets_list
  #database_subnets = var.database_subnets_list

  enable_flow_log                      = false
  create_flow_log_cloudwatch_log_group = false
  create_flow_log_cloudwatch_iam_role  = false
  #vpc_flow_log_permissions_boundary = aws_iam_policy.vpc_flow_logging_boundary_role_policy.arn
  flow_log_max_aggregation_interval = 60

  create_igw           = true
  enable_nat_gateway   = true
  enable_ipv6          = false
  enable_dns_hostnames = true
  enable_dns_support   = true
}
```

Ideally, I would add a testing and approval stage into the pipeline and we should deploy the Lambda Function into a separate TEST and PRODUCTION account. However, to demonstrate the key components (CI/CD pipeline) these additional components are omitted in this blog for clarity. Hopefully this post has improved your insight into using CI/CD pipelines.
